How to design a scalable web application

Episode #17: What are the best practices to keep in mind when designing your first web application so that you can later scale to millions of users?

This article will cover:

(free section) Minimum viable product: web app + database

(💰 paid) Think big, but start small: Vertical scaling -> Horizontal scaling

(💰 paid) Non-functional requirements: Observability and Infrastructure as Code.

(💰 paid) Horizontal scaling: Load Balancers and HTTP protocol

(💰 paid) Monolith vs Microservices: which pattern is better to start with

(💰 paid) Scaling the state: Federation, Sharding, Read replicas

As in previous articles, what you learn here is not limited to system design interviews but can be used in your daily job.

This article explicitly targets junior developers as it sets a foundation for future advanced articles. There are still quite a few lessons that a more experienced developer can learn from it.

Minimum viable product

We are going to start by designing the simplest possible web application.

The pattern described here is the foundation of most web applications these days, including ones at the scale of Netflix, Spotify, and LinkedIn. Even if some of those services have native apps, behind the scenes they still communicate with a web application similar to the pattern described in this article.

Most web applications are composed of two distinct layers:

a stateless web layer

a storage layer for the state

There is quite a lot to talk about in designing a web application, so we need to discuss what is in scope and what is out of scope for this article.

What is out of scope:

How to implement this architecture in the cloud

Computer Networking

SQL vs NoSQL database

Containers, Kubernetes, Serverless, or any other way to run your application

Components in the design:

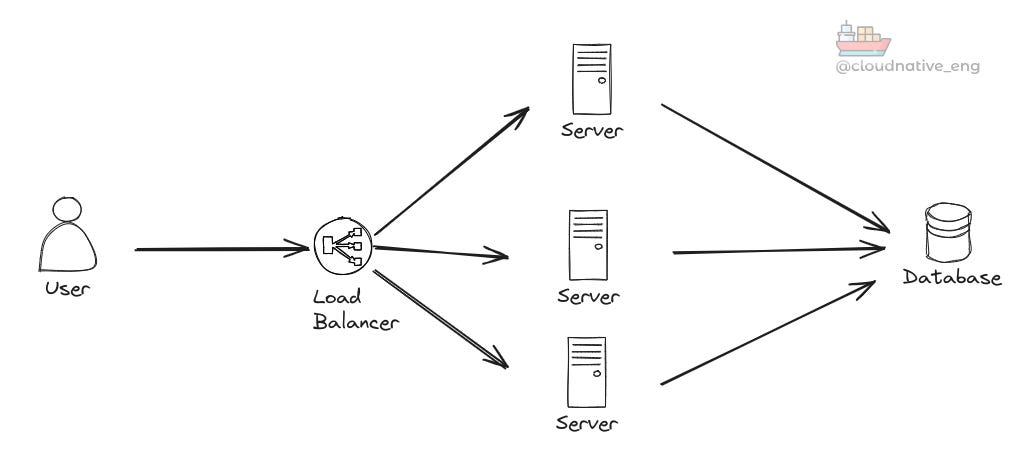

We will use a simple relational database.

Two or more web servers running a REST API that returns a JSON object.

A Load Balancer to distribute the request load between the web servers.

This is it.
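To make the two layers concrete, here is a minimal sketch of a stateless handler backed by a relational database. It is an illustration under assumptions, not a production design: an in-memory SQLite database stands in for the relational database, and the `users` table, the `get_user` function, and the sample row are all hypothetical names chosen for the example. No HTTP server or Load Balancer is started; the handler simply returns the status code and JSON body that a REST endpoint would send.

```python
import json
import sqlite3


def create_storage() -> sqlite3.Connection:
    """Storage layer: an in-memory SQLite database stands in for the relational database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute("INSERT INTO users (id, name) VALUES (1, 'Ada')")  # hypothetical sample row
    conn.commit()
    return conn


def get_user(conn: sqlite3.Connection, user_id: int) -> tuple[int, str]:
    """Web layer: a stateless handler returning an HTTP status code and a JSON body.

    The handler keeps nothing in memory between calls; all state lives in the database.
    """
    row = conn.execute(
        "SELECT id, name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    if row is None:
        return 404, json.dumps({"error": "user not found"})
    return 200, json.dumps({"id": row[0], "name": row[1]})


storage = create_storage()
status, body = get_user(storage, 1)
print(status, body)
```

Because the handler holds no state of its own, any number of identical web servers could run it behind the Load Balancer.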

Most of the complexity is in scaling and optimising the storage layer. We could write multiple articles about that alone. We will only mention a few techniques here since we won't have time to go deeper.

Think big, but start small

Many articles on system design suggest deploying the web app and the database on the same machine as a first step, and then moving to a larger machine later, when the load increases.

This practice is called vertical scaling. It can become quite expensive, and it only scales up to a certain point, because there is a limit to how large a single machine can get. I don't have actual numbers at hand, but my guess is that you can only scale up to hundreds or, at best, thousands of users.

The single-machine approach is a false start, in my opinion. It's a waste of time at best. It only works for prototypes or for hacking on a weekend.

Whether you are in an interview or designing such an application in real life, you want to create an application that can scale massively, well beyond thousands of users.

You should think big as if you were designing Facebook or Netflix.

But you also want to start with the simplest solution that supports your requirements.

How can you combine these two apparently opposite approaches? How can you start small but still prepare for a massive scale?

You should introduce new components and design concepts only when necessary.

Throughout this article, we will give some suggestions to guide you in this process.

If designing a massive web application is not what you have in mind, this article is not for you. You can stop reading now.

Are you still here? Great. Let's dive in.

Non-functional requirements

What I described with the minimum viable product should be enough if you are in a system design interview.

If you are creating an application that needs to scale to millions of users, it's crucial to consider two critical non-functional requirements from the beginning: Observability and Infrastructure as Code.

An in-depth discussion about either of those topics might fill entire books; here, we just discuss the need for them and why you might want to implement them from the beginning.

Observability will tell you what to prioritise.

Should you use read replicas for your database? Do you need a caching layer? Should you switch from REST to gRPC or GraphQL? Those are all questions you should be able to answer with proper Observability in place.

To implement Observability, you need to combine Infrastructure Observability with Application Observability. The former depends on your cloud provider, or on what you implement yourself if you are going bare metal. The latter requires instrumenting the code to generate logs, metrics and distributed traces.
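As a rough illustration of what instrumenting the code can look like, the sketch below wraps request handlers in a decorator that records a request counter, a latency total, and one structured log line per call. It uses only the Python standard library and covers logs and metrics; distributed traces are left out. The `instrumented` decorator, the in-process metric dictionaries, and the endpoint names are hypothetical; a real application would export these values to whatever observability backend it uses.

```python
import functools
import json
import logging
import time
from collections import defaultdict

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("webapp")

# Hypothetical in-process metrics; a real setup would export them to a metrics backend.
request_count = defaultdict(int)            # keyed by (endpoint, outcome)
total_latency_seconds = defaultdict(float)  # keyed by endpoint


def instrumented(endpoint: str):
    """Record a counter, a latency total, and a structured log line for each call."""
    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            outcome = "error"
            try:
                result = handler(*args, **kwargs)
                outcome = "ok"
                return result
            finally:
                elapsed = time.perf_counter() - start
                request_count[(endpoint, outcome)] += 1
                total_latency_seconds[endpoint] += elapsed
                logger.info(json.dumps({
                    "endpoint": endpoint,
                    "outcome": outcome,
                    "latency_ms": round(elapsed * 1000, 3),
                }))
        return wrapper
    return decorator


@instrumented("GET /users")
def list_users():
    return [{"id": 1, "name": "Ada"}]


@instrumented("GET /broken")
def broken_endpoint():
    raise RuntimeError("simulated failure")


list_users()
list_users()
try:
    broken_endpoint()
except RuntimeError:
    pass  # the failure is still counted and logged by the decorator
```

With counters and latencies per endpoint, questions such as "which endpoint would benefit from a cache?" can be answered from data rather than guesses.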

Your Observability strategy should evolve with your application and the user load.

An early investment in Infrastructure as Code pays off if you plan to keep the application running for the long term and adapt to the various challenges that come with going viral.

You want to use code to deploy your infrastructure so that you can quickly adapt to changes in the design and in customer load, and avoid repetitive manual operations.

For example, what if you need to duplicate your setup in another cloud region? What if you want to scale your infrastructure up and down with the seasonality of your application? Most of your traffic might come from the other side of the planet, and you don't want an engineer to stay up at night to scale your application manually.
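The idea behind Infrastructure as Code can be sketched without any real tool: describe the desired setup as data, then let code work out what has to change. The example below is a toy model only. The `Environment` class, the `plan` function, and the region names are hypothetical and do not correspond to any cloud provider's API; real tools apply the same describe-then-diff idea to actual cloud resources.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Environment:
    """Hypothetical declarative description of one deployment."""
    region: str
    web_servers: int
    database_size: str


def plan(current: Optional[Environment], desired: Environment) -> list[str]:
    """Compare the running state with the desired state and list the changes to apply."""
    if current is None:
        return [f"create environment in {desired.region}"]
    actions = []
    if current.web_servers != desired.web_servers:
        actions.append(
            f"set web servers in {desired.region}: "
            f"{current.web_servers} -> {desired.web_servers}"
        )
    if current.database_size != desired.database_size:
        actions.append(
            f"resize database in {desired.region}: "
            f"{current.database_size} -> {desired.database_size}"
        )
    return actions


# Hypothetical region names, for illustration only.
europe = Environment(region="eu-west", web_servers=2, database_size="medium")

# Duplicating the setup in another region is a one-line change to the description.
asia = replace(europe, region="ap-south")

# Scaling for a seasonal peak is another small, reviewable change.
europe_peak = replace(europe, web_servers=6)

print(plan(None, asia))
print(plan(europe, europe_peak))
```

Since the description lives in version control, every infrastructure change can be reviewed and repeated like any other code change.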

Horizontal scaling

As discussed in the previous sections, vertical scaling is never a solution in an actual interview or, most importantly, in real life.

Horizontal scaling, instead, is when you decide to use multiple machines of similar size and distribute the load between them. This approach is preferable in the long term and cheaper, too.

From a previous article titled Decoding HTTP: Networking Fundamentals for System Designers, we learned that the HTTP protocol is the perfect candidate to implement the stateless web layer.

HTTP is easy to implement, stateless by nature, and widely supported by programming languages. Many libraries let you write the REST APIs that make up our web application.
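What "stateless" buys you can be shown with a small sketch: if the web servers keep no per-user data in their own memory, a request can be served by any of them. The code below is a simplified model under assumptions, with no real HTTP involved. `SharedStore` stands in for the storage layer, and the `WebServer` class, server names, and cart example are hypothetical.

```python
class SharedStore:
    """Stand-in for the storage layer (a database or distributed cache in practice)."""

    def __init__(self) -> None:
        self._carts: dict[str, list[str]] = {}

    def add_item(self, user_id: str, item: str) -> None:
        self._carts.setdefault(user_id, []).append(item)

    def get_items(self, user_id: str) -> list[str]:
        return list(self._carts.get(user_id, []))


class WebServer:
    """A stateless server: it holds a reference to the store but no per-user data."""

    def __init__(self, name: str, store: SharedStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, user_id: str, item: str) -> dict:
        self.store.add_item(user_id, item)
        return {"served_by": self.name, "cart": self.store.get_items(user_id)}

    def view_cart(self, user_id: str) -> dict:
        return {"served_by": self.name, "cart": self.store.get_items(user_id)}


shared_store = SharedStore()
server_a = WebServer("web-1", shared_store)
server_b = WebServer("web-2", shared_store)

# The first request lands on one server, the next one on another.
server_a.add_to_cart("user-42", "book")
response = server_b.view_cart("user-42")
print(response)
```

The second server sees the item added through the first one because the state lives in the shared store, which is what makes adding or removing web servers safe.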

Once you decide to use horizontal scaling, the next thing is to have a Load Balancer as a single point of entry for your application.

Load Balancers provide many benefits:

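Security: you can install a TLS (SSL) certificate on the Load Balancer and terminate HTTPS there, so that traffic between the client and the Load Balancer is encrypted while traffic between the Load Balancer and your backend servers uses plain HTTP, which is usually acceptable when the backend servers sit on a private network. This makes certificate management much easier, because there is only one place to install and renew certificates.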

API gateway: A load balancer can implement caching, data compression, request routing, and much more, similar to an API gateway.

Maintenance: If you use a load balancer, you can easily conceal that one of the backend servers is out of service. You can proactively take one server out of the cluster for backup or maintenance purposes. Your users won't notice any difference, and the system will remain functional.
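The maintenance benefit can be sketched with a toy round-robin balancer that skips servers marked as out of service. This is an illustration only: round-robin is one common distribution strategy among several, and the `RoundRobinBalancer` class and server names are hypothetical. A real Load Balancer would detect unhealthy servers through health checks rather than manual calls.

```python
class RoundRobinBalancer:
    """Distribute requests across servers in turn, skipping those marked down."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._healthy = set(servers)
        self._next_index = 0

    def mark_down(self, server: str) -> None:
        """Take a server out of rotation, for example for maintenance."""
        self._healthy.discard(server)

    def mark_up(self, server: str) -> None:
        """Put a known server back into rotation."""
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self) -> str:
        """Return the next healthy server in rotation."""
        for _ in range(len(self._servers)):
            candidate = self._servers[self._next_index]
            self._next_index = (self._next_index + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
first_round = [balancer.next_server() for _ in range(3)]

balancer.mark_down("web-2")  # planned maintenance on one server
during_maintenance = [balancer.next_server() for _ in range(4)]

print(first_round)
print(during_maintenance)
```

While `web-2` is down, requests keep flowing to the remaining servers, so clients never see the maintenance window.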

I suggest implementing some form of automatic scaling from the beginning; you never know when your application might become viral.

These days, most cloud providers make it easy to increase the number of machines in your computing cluster according to a metric like CPU or request counts. Having this in place from the beginning will keep your application running even in case of usage spikes.
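One simple way to turn a metric into a machine count is a proportional rule: scale the current number of servers by the ratio between the observed metric and its target, then clamp the result. The sketch below assumes that rule; cloud providers each have their own policies, and the function name, default limits, and the 60% CPU target are hypothetical values chosen for the example.

```python
import math


def desired_server_count(
    current_servers: int,
    current_metric: float,
    target_metric: float,
    min_servers: int = 2,
    max_servers: int = 20,
) -> int:
    """Proportional scaling rule, clamped to a minimum and maximum cluster size."""
    if current_servers < 1:
        raise ValueError("current_servers must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_servers * current_metric / target_metric)
    return max(min_servers, min(max_servers, raw))


# 4 servers at 90% average CPU with a 60% target: scale out.
print(desired_server_count(4, current_metric=90.0, target_metric=60.0))

# 4 servers at 15% average CPU: scale in, but never below the minimum.
print(desired_server_count(4, current_metric=15.0, target_metric=60.0))
```

The minimum keeps at least two web servers running, matching the design above, and the maximum protects the budget during an unexpected spike.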

Monolith vs Microservices

What is a monolith application?

A monolith is an application whose components are built and deployed together as a single unit. This article won't discuss microservices architecture in depth. Starting a new application with microservices in mind is overkill unless you are rewriting a legacy application.

It only makes sense to think about splitting the monolith into multiple microservices once we have enough subscribers to need several teams of 5-7 people each.

Then, we would know enough about our application's architecture to draft clear boundaries for our microservices and scale them independently.

Scaling the state

As mentioned, the most challenging part of scaling your web application is scaling the database.

There are two main areas for scaling. Depending on the nature of your application, you should optimise for reads or writes.

Optimising the writes can be achieved with Sharding and Federation.

Federation is when you split your database tables across different databases, typically by function. This allows you to scale each database independently, but it has the side effect of preventing joins between tables that now live in different databases.
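The join limitation is easier to see in code. In the sketch below, two separate in-memory SQLite connections stand in for two independent database servers, one holding users and one holding orders. The table layout and sample rows are hypothetical. Since a single SQL JOIN cannot span the two servers, the application has to combine the data itself.

```python
import sqlite3

# Two separate databases, one per functional area (hypothetical split).
users_db = sqlite3.connect(":memory:")
users_db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
users_db.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ada"), (2, "Linus")])

orders_db = sqlite3.connect(":memory:")
orders_db.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER NOT NULL, total REAL NOT NULL)"
)
orders_db.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.5)],
)


def orders_with_user_names() -> list[tuple[int, str, float]]:
    """Application-side join: fetch from each database, then combine in code."""
    names = dict(users_db.execute("SELECT id, name FROM users"))
    rows = orders_db.execute("SELECT id, user_id, total FROM orders ORDER BY id")
    return [(order_id, names[user_id], total) for order_id, user_id, total in rows]


print(orders_with_user_names())
```

This works for small lookups, but it moves work the database used to do into the application, which is the trade-off to weigh before federating.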

Sharding is when you distribute the data in a database table (or logical unit) across multiple servers or partitions, each called a shard. Sharding is not limited to databases; Elasticsearch, for example, implements sharding for its indices.

There are many more options for optimising reads.
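A minimal sketch of hash-based sharding follows, assuming a fixed number of shards and a string key. Hash-modulo routing is only one of several sharding strategies, and the `shard_for` function and `ShardedStore` class are hypothetical names; plain dictionaries stand in for the separate database servers.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index with a stable hash.

    Python's built-in hash() is salted per process for strings, so it would
    route the same key differently after a restart; SHA-256 is stable.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """Route each key to one of several shards (dicts stand in for servers)."""

    def __init__(self, shard_count: int) -> None:
        if shard_count < 1:
            raise ValueError("shard_count must be at least 1")
        self._shards: list[dict[str, dict]] = [{} for _ in range(shard_count)]

    def put(self, key: str, value: dict) -> None:
        self._shards[shard_for(key, len(self._shards))][key] = value

    def get(self, key: str):
        return self._shards[shard_for(key, len(self._shards))].get(key)

    def shard_sizes(self) -> list[int]:
        return [len(shard) for shard in self._shards]


sharded_store = ShardedStore(shard_count=4)
for n in range(1000):
    sharded_store.put(f"user-{n}", {"id": n})

print(sharded_store.get("user-42"))
print(sharded_store.shard_sizes())
```

One caveat of the modulo approach is that changing the number of shards remaps most keys, so the shard count needs to be planned with growth in mind.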

You can add a caching layer in various parts of the architecture: inside the web app, as an external in-memory distributed cache, or in the database's own cache.
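As an illustration of caching inside the web app, here is a sketch of the cache-aside pattern with a time-to-live: look in the cache first, and only go to the database on a miss or after the entry expires. The `TTLCache` class, the loader function, and the 30-second lifetime are hypothetical. A fake clock is injected so the expiry can be demonstrated without waiting.

```python
import time
from typing import Any, Callable


class TTLCache:
    """A tiny in-process cache with per-entry expiry (cache-aside pattern)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database access
        value = loader(key)  # cache miss or expired entry: read from the source
        self._entries[key] = (now + self._ttl, value)
        return value


database_reads = {"count": 0}


def load_profile_from_database(user_id: str) -> dict:
    """Hypothetical slow database read; here it only counts how often it is called."""
    database_reads["count"] += 1
    return {"id": user_id, "name": "Ada"}


fake_now = [0.0]  # injected clock so the example does not have to sleep
profile_cache = TTLCache(ttl_seconds=30.0, clock=lambda: fake_now[0])

profile_cache.get_or_load("user-1", load_profile_from_database)  # miss: reads the database
profile_cache.get_or_load("user-1", load_profile_from_database)  # hit: served from memory
fake_now[0] = 31.0                                                # the entry has now expired
profile_cache.get_or_load("user-1", load_profile_from_database)  # reads the database again

print(database_reads["count"])
```

The time-to-live bounds how stale a cached value can get, which is the main trade-off to tune for each kind of data.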

Another technique for optimising your reads is called read replicas. With read replicas, data written to the primary database is replicated asynchronously to one or more other databases. Writes are routed to the primary database, while reads are served by the replicas. Because replication is asynchronous, a replica can briefly return stale data.
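The routing rule for read replicas can be sketched as follows. This is a simplified model under assumptions: dictionaries stand in for the databases, the explicit `replicate()` call stands in for asynchronous replication that a real database performs in the background, and all class and server names are hypothetical.

```python
import itertools


class Database:
    """Stand-in for one database server."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.rows: dict[str, str] = {}


class ReplicatedDatabase:
    """Send writes to the primary and spread reads across the replicas."""

    def __init__(self, primary: Database, replicas: list[Database]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self._primary = primary
        self._replicas = replicas
        self._replica_cycle = itertools.cycle(replicas)
        self._pending: list[tuple[str, str]] = []  # writes not yet replicated

    def write(self, key: str, value: str) -> None:
        self._primary.rows[key] = value
        self._pending.append((key, value))

    def replicate(self) -> None:
        """Apply pending writes to every replica (asynchronous in a real system)."""
        for key, value in self._pending:
            for replica in self._replicas:
                replica.rows[key] = value
        self._pending.clear()

    def read(self, key: str) -> tuple[str, object]:
        """Return the name of the replica that served the read and the value it holds."""
        replica = next(self._replica_cycle)
        return replica.name, replica.rows.get(key)


db = ReplicatedDatabase(
    Database("primary"), [Database("replica-1"), Database("replica-2")]
)

db.write("user-1", "Ada")
before_replication = db.read("user-1")  # the replica has not received the write yet
db.replicate()
after_replication = db.read("user-1")

print(before_replication)
print(after_replication)
```

The read issued before replication returns nothing, which shows the replication lag an application has to tolerate when it reads from replicas.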
